The semiconductor industry is experiencing a significant transformation, raising questions about the advantages traditionally associated with Moore’s Law and Dennard scaling. This shift highlights four key trends that intersect with technology, economics, and society.

The first trend is the perceived end of Moore’s Law. Analysis indicates that the benefits of advances in semiconductor technology, specifically in terms of cost, energy efficiency, and density, are diminishing (see Table 1). This suggests a departure from the era where technological advances consistently delivered substantial economic and performance improvements.

The second trend is the reevaluation of government subsidies. In response to these technological challenges, governments worldwide have allocated significant subsidies to support the development of advanced chip-fabrication facilities. The economic justification for these investments, however, is being increasingly scrutinized, especially when considering the complex dynamics of workforce and ecosystem support that can affect the operational success of these facilities, as well as their time to becoming operational.2,36

The third trend is access to semiconductor technology. Recent policy measures have aimed to regulate access to certain semiconductor technologies based on geopolitical considerations.10,23,25 In short, China is being prevented from accessing 7nm and lower technology.a These measures are designed to address concerns over AI technological supremacy, but also must be understood within the context of the broader industry trend toward diminishing returns from further miniaturization.

The fourth and final trend is the role of architectural efficiency. Economic and engineering considerations have prompted a renewed focus on architectural innovation. This trend illustrates how, despite the high costs of the latest technology nodes, thoughtful design at more accessible nodes, such as 12nm, can achieve competitive or even superior performance compared with designs at more advanced nodes, such as 5nm.1,33 This approach is also potentially more sustainable, offering reduced carbon footprints without compromising performance.8

Together, these trends underscore a complex landscape where the relationship between technological scaling, economic viability, and environmental sustainability is being redefined, necessitating a nuanced understanding of the semiconductor industry’s future direction.

| Metric | 16nm | 12nm | 7nm | 5nm | 3nm |
|---|---|---|---|---|---|
| Wafer price | 1.00 | 1.00 | 1.69 | 2.11 | 2.62 |
| Transistor cost | 1.16 | 1.00 | 0.62 | 0.52 | 0.50 |
| Area | 1.16 | 1.00 | 0.40 | 0.22 | 0.14 |
| Power | 1.29 | 1.00 | 0.61 | 0.43 | 0.43 |
| Delay | 1.25 | 1.00 | 0.74 | 0.51 | 0.45 |

In light of these trends, we observe that the relationship between technology scaling and architecture is not well understood. This is especially important to understand for high-capability deep-learning (DL) chips, given the popularity of DL applications. In this article, we seek to answer whether new AI chips can be built at 12nm technology using principles of specialization that match or exceed state-of-the-art (SOTA) chips made at 5nm or lower. We choose 12nm as a reference point because cost scaling beyond 12/10nm is less than 18% for successive nodes (shown in Table 1) and because it corresponds to the technology node at which the China bans come into effect.

In our study, we sought to understand the benefits that technology scaling provides, as well as the benefits architectural innovation can bring over SOTA AI chips. To that end, we introduce the concept of tiled decoupled control and compute (TDCC) architecture, which encapsulates the evolution of AI chip architectures toward matrix engines integrated with decoupled data-movement mechanisms. One instantiation of a TDCC architecture is Galileo, which we use as a tangible example to help concretize our empirical results. Here are the key findings from our study:

The study confirms that the architecture field’s well-established claim, that specialization can provide large benefits, also applies to AI training and inference chips, supplanting and superseding the benefits of technology scaling.

Quantifying the role of technology scaling, we find that from 12nm to 3nm, device scaling provides a best-case 2.9x improvement for “typical” DL workloads such as LLMs, when the die size is ≈ 800mm2 at both nodes, contrary to the 8x conventionally expected from technology scaling. From 7nm to 3nm, this benefit is 1.45x.

Setting aside the benefits of technology scaling, and isolating the benefits of architecture alone, we show that an architecture built at 12nm can surpass the performance of state-of-the-art 7nm (A100) and 5nm (H100) chips, by employing ideas from hardware specialization targeted to AI workloads.

This new paradigm of specialized architecture, which pushes the limits of specialization at mature technology nodes like 12nm, can also improve the total cost of ownership (TCO), carbon footprint,8 and economic productivity of the semiconductor industry, even in regions where newer nodes are available.

Key Questions and Framing

In this section, we first enumerate and explain the nuances behind our two key questions to determine what role technology scaling has independent of architecture. Then we describe the framing of our study in terms of the choice of platforms to compare to and a few of our simplifying assumptions. Finally, we describe our workloads in-depth, the TDCC terminology, and our modeling and simulation methodology.

Questions. There are two key questions we seek to answer. The first is, What is the role of technology? Here we want to understand what the benefits are from technology scaling and how and whether new transistors can be effectively exploited by new chip architecture. The second question is, What is the role of architecture? We want to understand whether architectural changes are possible that can outperform SOTA chip implementations built at 7nm and 5nm. We define and then evaluate a practical TDCC architecture, Galileo, to empirically answer this question.

Framing.

SOTA platform choice. Nvidia GPUs are the mainstream and practical option for DL training and, going by Nvidia chip-scarcity reports, probably for datacenter inference as well. Hence, we focus on matching that performance. In this work, we focus on big-iron datacenter chips, ignoring edge inference at the sub-200W regime. Figure 1 illustrates the chip-development process from the register transfer level (RTL) phase to chip bringup, showing a typical timeline of about 18 months. It showcases that creating new chips is a manageable effort that does not require years of development, making it accessible for diverse entities in the tech industry.

Numerics. We assume the stability issues of FP16 can be addressed with the modest addition of FP32 capability without a substantial increase in area. In particular, we assume FP64 compute capability is not needed in substantial amounts.

System scale. We focus on single-node training, with the observation that techniques for high-performance distributed training are orthogonal to single-node performance. Klenk et al.16 show that perfect all-reduce improves performance by 10% to 40%.

Workload characterization. We base our study on MLPerf, a standard benchmark for machine learning inference and training for hardware platforms,29,38 with application domains including image classification (ResNet50), object detection (SSD-ResNet34), speech recognition (RNN-T), recommendation systems (DLRM), medical image segmentation (UNET), and language transformers (BERT). LLMs like GPT-3 and Llama 2 have a DL-level architecture similar to BERT.

Table 2 presents a breakdown of the ResNet50 training mode by top contributing framework-level operations by percentage of runtime on V100 and A100 GPUs. Inference results in a similar breakdown for all applications, with backward operations not being present. We observe the operators found in MLPerf can be abstracted into three broad categories: compute operations, such as GEMMs, which often stress the compute resources of a chip; element-wise operations, which perform a small amount of independent computation for each element in a tensor; and index and irregular access operations, which involve retrieving memory through indirect addressing, often performing operations such as reductions or sorting. Based on the input tensor shapes to these operators, as well as the available machine resources such as peak compute, memory bandwidth, and OCN design, operators can become compute heavy, latency heavy, or bandwidth heavy. Table 3 shows a breakdown of MLPerf (training) by percentage of runtime spent in each of these categories of operations for V100 and A100 GPUs. Again, inference is omitted since it is similar to training for all applications.

| Op Name | V100 | A100 |
|---|---|---|
| batch_norm-bwd | 24.60% | 29.11% |
| batch_norm | 15.36% | 21.14% |
| conv-bwd | 28.38% | 20.57% |
| conv | 13.05% | 10.52% |
| add | 6.42% | 6.57% |
| threshold_backward | 5.32% | 5.26% |
| relu | 3.62% | 3.46% |
| max_pool2d_with_indices_backward | 2.42% | 2.35% |
| Other | 0.83% | 1.02% |

| App Name | V100 EX | V100 LAT | V100 BW | A100 EX | A100 LAT | A100 BW |
|---|---|---|---|---|---|---|
| RN50 | 36% | 11% | 53% | 43% | 30% | 26% |
| SSDEN34 | 55% | 4% | 40% | 58% | 28% | 14% |
| BERT | 72% | 3% | 25% | 73% | 9% | 17% |
| DLRM | 26% | 65% | 8% | 14% | 84% | 2% |
| RNN-T | 80% | 5% | 15% | 63% | 29% | 8% |
| UNET | 56% | 9% | 34% | 75% | 18% | 7% |

Based on experimental measurements of memory and tensor usage on a GPU using performance counters, we categorize operators as compute bound (EX) if they sustain more than 20% tensor usage or more than 50% SIMT usage. We categorize operators as bandwidth bound (BW) if they are not compute bound and sustain more than 50% memory bandwidth usage. Operators that are neither are latency bound (LAT). Davies et al.7 do a deep dive on the behaviors of operators found in MLPerf applications by considering these three broad categories of DL operators, focusing on what causes operators to be compute heavy, bandwidth heavy, or latency heavy and their interaction with the underlying architecture. We find that architectures can focus on these three categories of operations to achieve coverage and high performance for deep learning.
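The classification rule above can be written as a short decision procedure. The following is a minimal sketch, assuming utilizations are given as fractions in [0, 1]; the function names and the per-operator tuple layout are hypothetical and are not taken from the study's tooling.

```python
def classify_operator(tensor_util: float, simt_util: float, mem_bw_util: float) -> str:
    """Label one operator as EX, BW, or LAT from sustained utilization fractions."""
    # Compute bound: more than 20% tensor usage or more than 50% SIMT usage.
    if tensor_util > 0.20 or simt_util > 0.50:
        return "EX"
    # Bandwidth bound: not compute bound and more than 50% memory bandwidth usage.
    if mem_bw_util > 0.50:
        return "BW"
    # Everything else is treated as latency bound.
    return "LAT"


def runtime_breakdown(ops):
    """Return the fraction of total runtime per category.

    ops: iterable of (runtime, tensor_util, simt_util, mem_bw_util) tuples
    (a hypothetical trace format used only for this illustration).
    """
    totals = {"EX": 0.0, "BW": 0.0, "LAT": 0.0}
    for runtime, tensor_util, simt_util, mem_bw_util in ops:
        totals[classify_operator(tensor_util, simt_util, mem_bw_util)] += runtime
    total = sum(totals.values())
    if total == 0:
        return totals
    return {category: value / total for category, value in totals.items()}
```

Applied to a per-operator trace, `runtime_breakdown` yields the kind of EX/LAT/BW percentages reported per application in Table 3.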

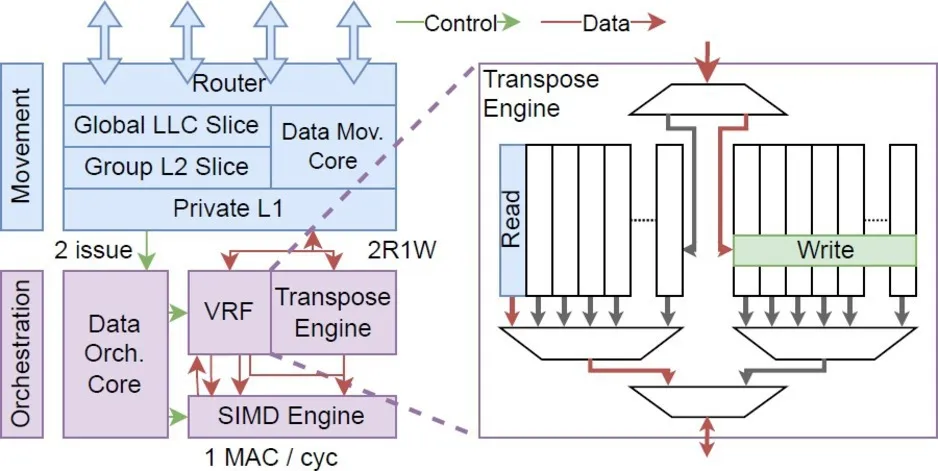

Tiled decoupled control and compute architecture. GPUs and recent academic (for example, SIMBA,34 SIGMA,27 and EyerissV24) and industry (for example, Gaudi14 and others30) works belong to a paradigm of chip architecture we call tiled decoupled control and compute (TDCC). The taxonomy and ideas developed by Nowatzki et al.22 show that architectures can be categorized along five axes: concurrency, coordination, communication, computation, and data reuse. For the TDCC family of architectures, tiling is used to exploit concurrency. Control (called coordination in the Nowatzki taxonomy) and compute are decoupled to allow an imperative programming model, while also supporting high-density compute. TDCC architectures use subtly different approaches for data-reuse and communication, outlined in Figure 2. Compared with the storage idioms explained in Pellauer et al.,26 the TDCC taxonomy encompasses a broader scope, capturing functional unit organization, communication network design, and control mechanisms.

Figure 2 shows five example TDCCs (including Galileo, discussed in detail later), with a breakdown of how each addresses the five categories of specialization. We order the architectures by increasing level of generality and application to widespread DL (training and inference). Below each design, we indicate with red text which features are not appropriately provisioned for AI inference and training. Note that these architectures are quite capable. For DL, data-reuse specialization can be further broken into data storage and data orchestration (reshaping, transposing, and other layout modifications for data structures that hardware can make efficient). The top half of the figure redraws the architectures from the original references (with some stylizing) and colors the components according to the six components of specialization. All of them exploit concurrency through repeating homogeneous tiles and use some kind of control core for coordination. Compared with a GPU, other TDCCs are more area-efficient by eliminating overheads introduced by SIMT, such as a large register file, shared-memory, and hardware such as FP64, which is not necessary for DL. Looking at DL chips through the lens of the TDCC taxonomy shows us that different compositions of architecture primitives are feasible and capable of outperforming state-of-the-art GPUs, allowing us to study the role of architecture.

What Is the Role of Technology?

We now examine our first question: whether an architecture built at an advanced technology node would outperform one built at an older (larger) technology node by a factor proportional to the area-density increase promised by Moore’s Law. Using Amdahl’s Law and knowledge of the three categories of operators, we developed an analytical performance model. Our model is architecture and implementation agnostic, allowing us to effectively estimate the performance gap attributable to technology alone. Based on well-accepted scaling equations35 summarized in Table 1, we determine iso-power and iso-frequency scaling. For this article, we use iso-frequency scaling.

Definitions and modeling equations. Let rbw, rc, and rl denote the fraction of operators that are bandwidth heavy, compute heavy, and latency heavy, respectively. Recall that we can classify all operations into these categories, so for a given application, rbw + rc + rl = 1. Given a baseline architecture and workload details, we model speedup with the following equation, which follows the Amdahl’s Law form:

$$\text{speedup} = \frac{1}{\dfrac{r_{bw}}{s_{bw}} + \dfrac{r_c}{s_c} + \dfrac{r_l}{s_l}}$$

where rbw, rc, and rl are as defined previously, and sbw, sc, and sl denote the speedup for bandwidth-, compute-, and latency-heavy operators, respectively, over a given baseline architecture.

We now discuss how we model the speedup that would be achieved when scaling a given architecture to a smaller technology node. As a running example, we consider modeling the speedup from an architecture built at 12nm scaled to 3nm.

Modeling bandwidth-heavy operator speedup. Bandwidth-heavy operator performance scales proportionally with memory bandwidth. Memory bandwidth can scale independent of technology (HBM2 PHYs exist even at 16nm33), so a baseline architecture and its technology-scaled counterpart would have the same available memory bandwidth. Thus, we fix sbw = 1. Benefits from narrower datatypes like FP8 and MSFP are also technology independent, reducing bandwidth needs and compute density.

Modeling compute-heavy operator speedup. We assume compute-heavy operator performance would scale proportionally to available peak compute. Considering area, peak compute is proportional to the amount of area dedicated to compute. Let areachip denote chip area and areamem denote the area occupied by HBM controllers and other peripherals. Then the compute area scale is:

To estimate sc, we observe that if we hold total area constant, the factor increase in area devoted to compute follows the area scaling factor (from 12nm to 3nm we would estimate the compute density to increase by 6.7x). A further linear compute speedup can come from frequency increase at iso-power (2.165x = delay ratio from Table 1 for 12nm to 3nm). Thus, for 12nm to 3nm, we would set sc = 6.7 x 2.165 = 14.5.

Modeling latency-heavy operator speedup. For latency-dominated operators, caches and additional chip area provide speedup; for these operators, we model their speedup as $s_l = (a_c)^{\gamma}$ for some power γ applied to the compute area scale $a_c$. CPUs are an example of architectures that improve latency-bound applications by exploiting ILP/MLP, where speedup grows as a square root or cube root of chip area. We consider γ ranging from 0.25 to an extreme case where γ = 1.
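To make the three per-category speedups concrete, here is a minimal sketch of the model for the 12nm-to-3nm running example. It assumes the Amdahl's Law form, speedup = 1 / (rbw/sbw + rc/sc + rl/sl), takes the 6.7x area scale and 2.165x frequency scale quoted in the text, and defaults to γ = 0.5; the function and constant names are hypothetical.

```python
AREA_SCALE_12_TO_3 = 6.7    # compute-density increase quoted in the text
FREQ_SCALE_12_TO_3 = 2.165  # frequency increase quoted in the text


def technology_speedup(r_c, r_l, s_c, s_l, s_bw=1.0):
    """Amdahl-style speedup over the baseline; r_bw is implied by r_c and r_l."""
    r_bw = 1.0 - r_c - r_l
    return 1.0 / (r_bw / s_bw + r_c / s_c + r_l / s_l)


def gap_12nm_to_3nm(r_c, r_l, gamma=0.5):
    """Technology gap of a 12nm design relative to the same design at 3nm."""
    s_c = AREA_SCALE_12_TO_3 * FREQ_SCALE_12_TO_3  # about 14.5
    s_l = AREA_SCALE_12_TO_3 ** gamma              # latency-heavy speedup
    return technology_speedup(r_c, r_l, s_c, s_l, s_bw=1.0)


if __name__ == "__main__":
    for r_c, r_l in [(0.64, 0.10), (0.64, 0.01), (0.50, 0.10), (0.50, 0.01)]:
        print(f"r_c={r_c:.2f} r_l={r_l:.2f} gap={gap_12nm_to_3nm(r_c, r_l):.1f}x")
```

Because sbw is fixed at 1, the bandwidth-heavy fraction bounds the achievable speedup no matter how large sc becomes, which is why the result stays far below the compute speedup of about 14.5x.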

Our findings. Figure 3 shows the speedup given by scaling an architecture from 12nm to 3nm for two different fractions of rl (small, where rl = 0.1, and vanishing, where rl = 0.01), for a sweep of ratios for the other two regions, and three plots for γ = 0.25, 0.5, and 1. We call this speedup the technology gap that a 12nm node suffers. For context, BERT has an rc = 0.64 (ratio computed on a hypothetical 3nm H100 successor that is two times faster than the H100) and essentially no latency-heavy operators. Simply because of Amdahl’s Law effects, γ does not have a large impact on final speedup, since rl is low in DNNs. We also observe that, when rc < 0.64, regardless of r, the maximum additional speedup from technology scaling from 12nm to 3nm is 2.9x. For heavily compute-dominated workloads (0.50 ≤ rc ≤ 0.64), this gap is 2.9x for small rl, and 2.5x for vanishing rl.

Because the area gap, and hence sc and sl, are the largest for 3nm, the technology gap of 12nm to 5nm and 7nm will be lower than the 3nm gap. The computed values from running our model are shown in Table 4. From our model, we can also compute the gap from 7nm to 5nm and 3nm, simply by dividing these speedups, shown in the last four rows in the table.

| rc | rl | 12nm | 7nm | 5nm | 3nm |
|---|---|---|---|---|---|
| Technology gap of 12nm node | | | | | |
| 0.64 | 0.10 | 1.0 | 2.0 | 2.6 | 2.9 |
| 0.64 | 0.01 | 1.0 | 1.8 | 2.3 | 2.5 |
| 0.50 | 0.10 | 1.0 | 1.6 | 2.0 | 2.1 |
| 0.50 | 0.01 | 1.0 | 1.6 | 1.8 | 1.9 |
| Technology gap of 7nm node | | | | | |
| 0.64 | 0.10 | 0.5 | 1.0 | 1.3 | 1.45 |
| 0.64 | 0.01 | 0.6 | 1.0 | 1.3 | 1.4 |
| 0.50 | 0.10 | 0.6 | 1.0 | 1.25 | 1.3 |
| 0.50 | 0.01 | 0.6 | 1.0 | 1.1 | 1.2 |
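The 7nm rows of Table 4 follow from the 12nm rows by division, as the text describes. A minimal sketch of that rebasing step is below; the dictionary layout and the `rebase` helper are hypothetical, and the input values are copied from the 12nm rows of the table.

```python
# Technology gap of the 12nm node, keyed by (r_c, r_l), values from Table 4.
GAP_12NM = {
    (0.64, 0.10): {"12nm": 1.0, "7nm": 2.0, "5nm": 2.6, "3nm": 2.9},
    (0.64, 0.01): {"12nm": 1.0, "7nm": 1.8, "5nm": 2.3, "3nm": 2.5},
    (0.50, 0.10): {"12nm": 1.0, "7nm": 1.6, "5nm": 2.0, "3nm": 2.1},
    (0.50, 0.01): {"12nm": 1.0, "7nm": 1.6, "5nm": 1.8, "3nm": 1.9},
}


def rebase(gaps, new_base):
    """Re-express speedups relative to a different baseline node by division."""
    base = gaps[new_base]
    return {node: value / base for node, value in gaps.items()}


if __name__ == "__main__":
    for key, gaps in GAP_12NM.items():
        rebased = rebase(gaps, "7nm")
        print(key, {node: round(value, 2) for node, value in rebased.items()})
```

Small differences from the published 7nm rows are expected, since the inputs here are already rounded to one decimal place.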

Answer 1. The technology gap—that is, the benefit of technology scaling that can be used to create improvements in DL performance—is limited to 2.9x (rc = 0.64, rl = 0.1 represents LLMs/transformer models; rc = 0.5 represents many MLPerf applications) considering the benefits of 3nm technology, compared with the 12nm technology node. Considering transitioning from 7nm to 3nm technology, this gap is 1.45x for LLMs and 1.3x for the entire MLPerf suite.

Commentary. This 2.9x gap/speedup is significantly below the 8x gap expected based on traditional interpretations of Moore’s Law for three node generations. For the comprehensive set of MLPerf applications, this gap further reduces to only 2.1x. Overall, this raises profound questions about the adoption timelines of newer, more advanced nodes that the entire semiconductor industry must address, considering economics, access blockades, and sustainability.

Model validation. We validate this model in two ways, shown in Table 5. First, we consider our applications and, from the characterization in Table 3, we determine values for rbw and rc (rl is always zero) for V100 execution. We then use measured values for sbw and sc, considering peak compute and peak bandwidth for the A100 and V100. From these measurements, we can determine the model’s predicted speedup of the A100 over the V100. We compare this speedup to the measured speedup from running the applications’ actual code and measuring time on the A100 compared with the V100.

| App/Shape | Measured | Predicted |
|---|---|---|
| ResNet50 | 1.7x | 2.0x |
| SSD-ResNet34 | 1.8x | 2.1x |
| BERT | 2.1x | 2.3x |
| DLRM | 1.2x | 1.8x |
| RNN-T | 2.2x | 2.4x |
| UNET | 2.1x | 2.1x |
| BW-dominated operators | | |
| 16384x128x256 | 1.6x | 1.7x |
| 32768x128x64 | 1.7x | 1.7x |
| 256x8192x4096 | 1.7x | 1.7x |
| Compute-dominated operators | | |
| 4096x2048x2048 | 2.5x | 2.6x |
| 1024x8192x2048 | 2.2x | 2.6x |
| Latency-dominated operators | | |
| 256x256x64 | 1.2x | 1.6x |
| 512x256x64 | 1.4x | 1.6x |
| 1024x256x128 | 1.3x | 1.6x |

To validate using microbenchmarks, we selected a set of GEMM shapes (whose corresponding MLP hyperparameters are also shown) that we know are bandwidth limited (very large M dimension, but small K and small N) or compute limited (very large M, N, and K). For the former, rbw = 1 and rc = rl = 0. For the latter, rc = 1 and rbw = rl = 0. We also use a set of operators that are latency dominated (for which we run the model with γ = 0.5). Since rl = 0 for all the other cases, the value of γ does not matter. We perform the same steps as for MLPerf: Run code on the V100, measure the r’s, project and measure speedups on the A100, and compare measured to projected.

We find our model is quite accurate for MLPerf, BW-dominated, and compute-dominated microbenchmarks. For the latency-dominated microbenchmarks, even with γ = 0.5, our model overpredicts speedup. At least for these operators, γ = 0.25 provides a good curve fit.
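One way to quantify the agreement is the relative error of the predicted speedup against the measured one. The sketch below does this for the six MLPerf rows of Table 5; the error metric and the names used are assumptions for illustration, not the validation procedure of the study.

```python
# (measured, predicted) A100-over-V100 speedups, copied from Table 5.
MLPERF_VALIDATION = {
    "ResNet50": (1.7, 2.0),
    "SSD-ResNet34": (1.8, 2.1),
    "BERT": (2.1, 2.3),
    "DLRM": (1.2, 1.8),
    "RNN-T": (2.2, 2.4),
    "UNET": (2.1, 2.1),
}


def relative_error(measured, predicted):
    """Signed relative error; positive values mean the model overpredicts."""
    return (predicted - measured) / measured


def worst_case(rows):
    """Return the name of the row with the largest absolute relative error."""
    return max(rows, key=lambda name: abs(relative_error(*rows[name])))


if __name__ == "__main__":
    for name, (measured, predicted) in MLPERF_VALIDATION.items():
        print(f"{name:>12}: {relative_error(measured, predicted):+.0%}")
    print("largest deviation:", worst_case(MLPERF_VALIDATION))
```

The same helper can be applied to the microbenchmark rows to see how the overprediction for latency-dominated shapes compares with the other categories.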

What Is the Role of Architecture?

We now examine our second question. In the context of domain-specific accelerators, this question can also be viewed as how architectural specialization can provide benefits independent of technology scaling. As a case study for this exploration, we introduce an example TDCC architecture, Galileo, and then compare it to current SOTA solutions.

Galileo organization. At the system level, Galileo is a fabric of tiles that contain compute elements and slices of a distributed memory hierarchy and are connected via a mesh interconnect. The system includes a global thread scheduler that transmits work to cores based on software-defined work placement. It also includes a host interface controller (PCIe-like interface) to provide high-bandwidth, low-latency communication to a general purpose host computer that runs the DL framework code. Finally, one or more memory controllers and PHY on chip (HBM is preferred) feed the on-chip memory hierarchy. The organization of the tile, memory hierarchy, and on-chip network follow.

Tile organization. Galileo’s tile organization is depicted in Figure 4. Tiles in Galileo consist of the following: 1) a general purpose core coupled with a short-vector SIMD engine, 2) a vector register file (VRF), and 3) a slice of a distributed, three-level memory hierarchy. The SIMD engine is designed to efficiently execute both matrix-multiply and element-wise operations, where each lane supports two Int8 and one FP16 multiply-accumulate operations per cycle. For dot-product operations on integer data, as found in matrix-multiply, a number of adjacent multiply-accumulate operations are combined into a wider output (for example, 4 Int8 MACs → 1 Int32). The effects of wide accumulation and transposed matrix layouts are accommodated with a small custom “transpose unit” that buffers cache lines read from memory until full vectors can be written to the VRF. For element-wise operators, the SIMD engine alone is sufficient.

Memory hierarchy. Each tile has a private L1 cache designed to supply the high-bandwidth needed from the SIMD engine. Galileo also includes a distributed L2 cache shared among a cluster of tiles, and a globally distributed LLC shared by all tiles. The storage hierarchy is augmented with a small programmable core in each tile, allowing the local cache slices to “push” data to recipient tiles, effectively eliminating request traffic from the interconnect. Operator-specific information provided by DL frameworks enables static-analysis techniques to easily extract out dynamic data-movement needs, allowing this “push” style of data movement to be generated automatically by an operator compiler.

On-chip network. Tiles are connected via a simple 2D-mesh topology with dimension-ordered routing. The network allows data-movement cores within a tile to multicast data with a destination bitmask, enabling reuse of network hops that would otherwise be repeated for the same data to reach multiple destinations. The three-level cache hierarchy reduces the number of destinations at each level of transmission, allowing the bitmasks to fit within the packet header for each cache line of data.
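The hop reuse that multicast provides can be illustrated with a small model of dimension-ordered (X-then-Y) routing on a 2D mesh. This is a minimal sketch under stated assumptions: tiles are addressed by (x, y) coordinates, the bitmask uses one bit per tile in row-major order, and all function names are hypothetical rather than part of Galileo.

```python
def xy_route(src, dst):
    """Return the directed links on an X-then-Y path from src to dst."""
    x, y = src
    links = []
    while x != dst[0]:
        next_x = x + (1 if dst[0] > x else -1)
        links.append(((x, y), (next_x, y)))
        x = next_x
    while y != dst[1]:
        next_y = y + (1 if dst[1] > y else -1)
        links.append(((x, y), (x, next_y)))
        y = next_y
    return links


def unicast_hops(src, dsts):
    """Total link traversals when each destination gets its own packet."""
    return sum(len(xy_route(src, dst)) for dst in dsts)


def multicast_hops(src, dsts):
    """Link traversals when packets sharing a link are sent only once."""
    shared_links = set()
    for dst in dsts:
        shared_links.update(xy_route(src, dst))
    return len(shared_links)


def destination_bitmask(dsts, mesh_width):
    """Encode destinations as a bitmask, one bit per tile in row-major order."""
    mask = 0
    for x, y in dsts:
        mask |= 1 << (y * mesh_width + x)
    return mask


if __name__ == "__main__":
    src, dsts = (0, 0), [(3, 0), (3, 1), (3, 2)]
    print("unicast hops:  ", unicast_hops(src, dsts))
    print("multicast hops:", multicast_hops(src, dsts))
    print("bitmask:       ", bin(destination_bitmask(dsts, mesh_width=4)))
```

In this example the three destinations share the path along the first row, so the multicast traverses 5 links instead of the 12 needed by three separate unicasts. The bitmask grows with the number of addressable tiles, which is why limiting the destinations per level of the hierarchy helps it fit in a packet header.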

Software stack. Galileo adheres to the best practices that modern DL stacks have converged to: a layered approach to expose DL hardware to applications18 comprising a framework-level parser, IR, operator mapping, and fusion optimization. Based on learnings from TimeLoop24 and MAESTRO,17 Galileo adopts output-stationary dataflow as a baseline strategy. For matrix-multiply and convolution, this lends itself to an easy-to-understand parallel algorithm since it does not use inter-thread communication, instead leaning on Galileo’s “push” data movement and dynamic multicast to exploit load-reuse opportunities, and the transpose engine to efficiently use the SIMD engine. In practice, we observe output-stationary to afford high performance. More complex tuning of dataflow patterns could be considered, and our performance results for Galileo can be viewed as a floor on what is possible via software tuning. Work tiles are sized to optimize for arithmetic intensity, respecting minimum tiling parameters to fill the SIMD vector width and L1 cache capacity. For each operator, we develop a kernel template that handles a single output work tile.
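The output-stationary idea can be shown with a small tiled matrix multiply in which each output work tile is accumulated locally and written exactly once, so work tiles never exchange partial sums. This is an illustrative sketch in plain Python, not Galileo's kernel template; the function name and default tile sizes are hypothetical.

```python
def matmul_output_stationary(a, b, tile_m=2, tile_n=2):
    """Multiply a (m x k) by b (k x n), one independent output tile at a time."""
    m, k, n = len(a), len(a[0]), len(b[0])
    c = [[0] * n for _ in range(m)]
    for i0 in range(0, m, tile_m):
        for j0 in range(0, n, tile_n):
            # One output work tile: its accumulators stay local until done.
            i1, j1 = min(i0 + tile_m, m), min(j0 + tile_n, n)
            acc = [[0] * (j1 - j0) for _ in range(i1 - i0)]
            for p in range(k):  # stream the reduction dimension
                for i in range(i0, i1):
                    a_ip = a[i][p]
                    for j in range(j0, j1):
                        acc[i - i0][j - j0] += a_ip * b[p][j]
            # Each output element is written exactly once, by its own tile.
            for i in range(i0, i1):
                for j in range(j0, j1):
                    c[i][j] = acc[i - i0][j - j0]
    return c


if __name__ == "__main__":
    a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    b = [[1, 0], [0, 1], [1, 1]]
    print(matmul_output_stationary(a, b))
```

Because every tile reads shared rows of `a` and columns of `b` but owns its outputs, the only cross-tile interaction is input reuse, which is the part the text assigns to push-based data movement and multicast.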

Understanding the design space. Compared with EyerissV2, Galileo primarily changes the compute units within the PEs, which are overspecialized for convolution operations, to general-purpose SIMD units, and uses a dynamic (packet-switched) NoC to enable flexible communication. Simba is nearly identical to Galileo, with the main differences being the inclusion of the transpose engine to facilitate highly efficient intra-PE data orchestration and the generalization of specialized buffers to hardware-managed caches and push-based network communication. Sigma is also quite similar to Galileo but is very over-provisioned for data orchestration within the PE. Instead of expensive, software-programmable Benes and FAN networks for shuffling data to SIMD lanes, Galileo uses the transpose engine, which is sufficiently flexible for DL operators. Compared with a GPU, Galileo specializes data reuse by eliminating the register file and SIMT execution in favor of the transpose engine. We specialize control by using a simple in-order core. For compute, we use SIMD execution with data-transpose. Instead of short-vector SIMD with data-shuffle operations, GPUs achieve efficient intra-PE matrix-multiply with systolic matrix-multiply-accumulate engines (for example, TensorCores).

Empirical methodology. To evaluate the role of architecture, we need more detailed treatment of workloads and the actual architecture itself compared with the question of technology scaling. We detail this methodology below.

Technology modeling. Similar to the technology scaling study, we use well-accepted scaling equations35 (summarized in Table 1) and use iso-frequency scaling.

Architecture performance modeling. We insert region markers into TensorFlow and PyTorch at the operator boundary to generate an operator trace from our selected applications. We build a trace-based performance model that accounts for intra-tile operation, prefetch and data movement between tiles, and data movement from external HBM to on-chip memory. The core model accounts for the interaction between SIMD, transpose, load, and store instructions. The network model counts network hops at a cache-line granularity and uses bandwidth per link to estimate latency. We model external memory traffic with a simple roofline formula. Works including TimeLoop24 and MAESTRO17 offer an analytical model for the performance of TDCC-like architectures, though we found them to be insufficient for capturing the effects of dynamic cache state and interconnect topology and contention.
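The roofline treatment of external memory traffic can be sketched as follows. This assumes the standard roofline form, in which an operator's time is the larger of its compute time and its memory-transfer time; the function names and units are hypothetical, and the authors' trace-based model includes core and network effects that this sketch omits.

```python
def roofline_time(flops, bytes_moved, peak_flops_per_s, mem_bytes_per_s):
    """Estimated operator time in seconds: the slower of compute and memory."""
    compute_time = flops / peak_flops_per_s
    memory_time = bytes_moved / mem_bytes_per_s
    return max(compute_time, memory_time)


def is_memory_bound(flops, bytes_moved, peak_flops_per_s, mem_bytes_per_s):
    """True when arithmetic intensity falls below the machine balance point."""
    arithmetic_intensity = flops / bytes_moved          # FLOPs per byte
    machine_balance = peak_flops_per_s / mem_bytes_per_s
    return arithmetic_intensity < machine_balance


if __name__ == "__main__":
    # Hypothetical operator and machine numbers, chosen only for illustration.
    t = roofline_time(flops=2e12, bytes_moved=1e9,
                      peak_flops_per_s=1e12, mem_bytes_per_s=1e12)
    print(f"estimated time: {t:.3f} s")
```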

Architecture power and area estimation. We use the methodology in Accelergy39 and TimeLoop for our area and power modeling. We additionally use data for the Ara core3 for modeling SIMD compute units and the LX3 core from Nowatzki et al.22 for modeling an in-order core. We use the arithmetic units from Mach et al.20 as a reference FP16 to complement Accelergy’s modeling. Finally, CACTI is used for power and area estimates of the last-level cache. All of these components are scaled to a target technology node using the methods from Stillmaker and Baas.35 The power consumption of the memory controllers and PHY and HBM stacks is 6W per stack based on data sheets for HBM2. Our frequency-scaling parameters are derived from ARM’s published industry state of the art.5

Baseline and comparing architecture performance. To report our results, we obtained published performance results of the Nvidia A100 system. We paid close attention to ensure that we were using the exact same DL model as the Nvidia system. For some applications, we used Nvidia’s code published through MLPerf to replicate performance results and obtain results for different batch sizes.21,32 For BERT-large pre-training, we were unable to run Nvidia’s official code on a single GPU, so we used the ratio of large-batch inference to small-batch inference to estimate small-batch pre-training. To obtain layer-level performance and efficiency, we ran individual operators with PyTorch on an A100 and collected runtime (latency) for each layer using Nvidia’s NSYS, computing utilization with FLOP counts for each layer. To get the H100’s performance, we used the most recently published results from Nvidia, which report speedups over the A100.32

Galileo design space. We first conducted an in-depth analysis of the design space of Galileo that is created over the parameters of SIMD-width, number of processing cores, and frequency. We plotted all of the design points in our space in terms of their area efficiency (TOPS/mm2) and power efficiency (pJ/op). Figure 5 shows the results of this survey. We can see that there is quite a diverse spread of design points that vary by up to a factor of 5x in terms of area efficiency, and 6x in terms of power efficiency. Further, we observe that not all applications agree on which design point is the most efficient. This shows that Galileo has a diverse design space that can provide good power and area efficiency for DL training and inference.

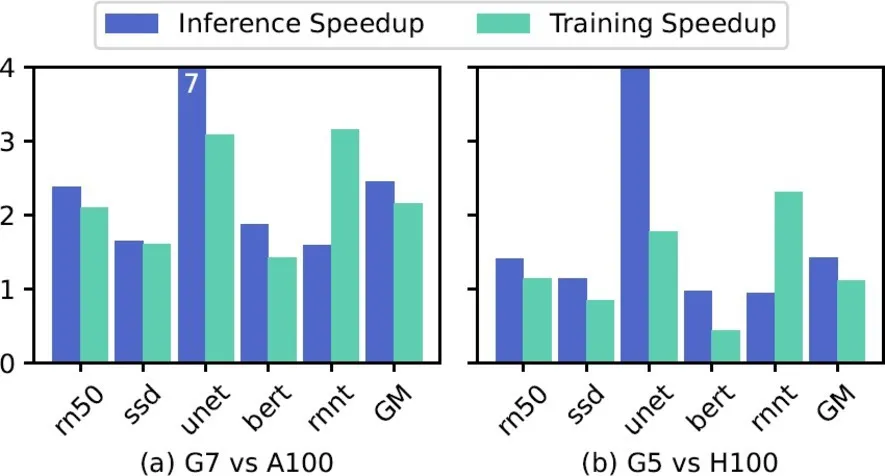

Our findings. We hold technology at 12nm for Galileo and quantify to what extent architectural changes alone can provide performance benefits by comparing different variants of Galileo to Nvidia’s SOTA A100 and H100 chips. Building on the insights from our design-space exploration, we chose two configurations of Galileo, which we call G7 and G5—both 12nm—that are designed to compete with the 7nm A100 and 5nm H100 GPUs, and evaluate their performance and efficiency. Note here that the Galileo architecture is built at 12nm, while the A100 and H100 are at 7nm and 5nm, respectively, thus isolating the role of architecture. Our evaluation is on the workloads from the entire MLPerf suite.

Matching 7nm performance with architectural specialization at 12nm. First, we consider the G7 Galileo implementation against an Nvidia A100. Our G7 configuration comprises 2048 cores with 512-bit SIMD vectors. Table 6 shows a comparison of the G7 to the A100. G7 is sized to (roughly) match the compute capability and memory capacity of an A100—its tile structure, on-chip network, and memory hierarchy are then determined through our DSE to achieve a balanced design. In particular, we observe Galileo’s bandwidth needs are much lower, allowing a slower HBM clock frequency.

| Spec | G7 | A100 |
|---|---|---|
| Tech Node | 12nm | 7nm |
| Die Area | 498mm2 | 840mm2 |
| Peak TOPS (Int8/FP16) | 628/324 | 624/312 |
| Power (TDP) | 200W | 250W |
| Frequency | 2.4GHz | 1.4GHz |
| Total L1/L2/LLC Size | 64/32/32MB | 20.25/-/40MB |
| HBM2 Memory | 16GB | 40GB |
| # HBM2 Stacks | 2 | 5 |
| GM pJ/op | 0.38 | 2.8 |

Note: We rename Nvidia’s L2 to LLC to compare with Galileo.

As seen in the design-space exploration, Galileo is able to run efficiently at a smaller batch size than the A100 by being specialized with efficient mechanisms for memory transfers. Since GPUs need very high batch sizes to exploit parallelism, they end up suffering from higher bandwidth needs as well. We measure performance in terms of samples/second and, to be fair and generous to the GPU, pick a batch size that provides the highest samples/second.

Figure 6a shows the performance comparison. Overall, the G7 achieves a 2.5x/2.2x geo-mean speedup for inference/training with 7x/6x better power efficiency. We intentionally picked a design point with roughly the same peak compute as the A100 to make it clear that the speedup we see with G7 comes from improving the utilization of compute resources by the same factor, with almost an order of magnitude lower energy. The energy efficiency of the G7 is much higher because it relies on a power- and area-efficient microarchitecture that targets DL and exploits reuse, whereas a GPU must adhere to a throughput-optimized execution model that constantly moves values in and out of main memory.
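The utilization argument can be checked with simple arithmetic. The sketch below is illustrative only: it assumes a simplified model (not stated in the article) in which speedup is the product of the peak-compute ratio and a utilization ratio, and the function name `implied_utilization_gain` is hypothetical. The inputs are the FP16 peak figures from Table 6 and the geo-mean speedups quoted above.

```python
# Figures copied from Table 6 and the geo-mean speedups quoted in the text.
G7_PEAK_FP16_TOPS = 324
A100_PEAK_FP16_TOPS = 312
G7_SPEEDUP = {"inference": 2.5, "training": 2.2}


def implied_utilization_gain(speedup, peak_new, peak_ref):
    """Divide a measured speedup by the peak-compute ratio.

    Assumed model (not from the article):
        speedup = (peak_new / peak_ref) * utilization_gain
    """
    return speedup / (peak_new / peak_ref)


peak_ratio = G7_PEAK_FP16_TOPS / A100_PEAK_FP16_TOPS
gains = {
    phase: implied_utilization_gain(s, G7_PEAK_FP16_TOPS, A100_PEAK_FP16_TOPS)
    for phase, s in G7_SPEEDUP.items()
}

print(f"peak FP16 ratio: {peak_ratio:.2f}x")
for phase, gain in gains.items():
    print(f"{phase}: implied utilization gain {gain:.2f}x")
```

Because the peak ratio is within a few percent of 1, nearly all of the reported speedup remains after normalization, which is consistent with attributing it to utilization rather than raw compute.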

Matching 5nm performance with architectural specialization at 12nm. We defined the G5 configuration (also built at 12nm) to match the compute capacity of the H100. We provisioned its bandwidth and memory hierarchy to be balanced and sufficient for the compute. Table 7 compares the G5 to the H100. When sized to match the compute capacity of the H100, the G5 exceeds the reticle-limit die area of 830mm². When this area limit is enforced, a balanced G5 design achieves a peak of only 590 FP16 TOPS compared with the H100’s 756.

| Spec | G5 | H100 |
|---|---|---|
| Tech Node | 12nm | 5nm |
| Die Area | 830mm² | 840mm² |
| Peak TOPS (Int8/FP16) | 1180/590 | 1513/756 |
| Power (TDP) | 350W | 350W |
| Frequency | 2.4GHz | 1.4GHz |
| Total L1/L2/LLC Size | 120/60/32MB | 29/-/50MB |
| HBM2e Memory | 32GB | 80GB |
| # HBM2e Stacks | 4 | 5 |
| GM pJ/op | 0.53 | 1.9 |

Note: We rename Nvidia’s L2 to LLC to compare with Galileo.

Figure 6b shows the corresponding performance comparison for the G5 and the H100. Overall, the G5 achieves a 1.4x/1.1x geo-mean speedup for inference/training with 3.8x/3.0x better power efficiency. Because the G5 is area-limited, and therefore has lower peak performance than the H100, Galileo cannot achieve an improvement as large as that of the G7 over the A100. Technology scaling also gives the H100 better power efficiency for its compute than the A100, which explains the relatively smaller power-efficiency improvement of the G5 over the H100. We note that the G5 is worse than the H100 on BERT training. The H100’s performance there comes from arithmetic specialization in the form of transparent FP8 conversion (sustaining 6.7x speedup over the A100 base31,32), a specialization that could also transparently help the G5 and is unrelated to transistor scaling.
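The two effects described here (the GPU's own efficiency gain from scaling, and the G5's capped peak compute) can be read directly off Tables 6 and 7. The following is a minimal sketch using only the GM pJ/op rows and the FP16 peak figures; the helper name `energy_advantage` is hypothetical, and the pJ/op ratios are not the same measurement as the power-efficiency factors reported from Figure 6.

```python
# GM pJ/op rows from Tables 6 and 7; peak FP16 TOPS from Table 7.
PJ_PER_OP = {"G7": 0.38, "A100": 2.8, "G5": 0.53, "H100": 1.9}
G5_PEAK_FP16_TOPS = 590
H100_PEAK_FP16_TOPS = 756


def energy_advantage(ours, theirs):
    """How many times less energy per operation `ours` uses than `theirs`."""
    return PJ_PER_OP[theirs] / PJ_PER_OP[ours]


g7_vs_a100 = energy_advantage("G7", "A100")      # Galileo vs. 7nm GPU
g5_vs_h100 = energy_advantage("G5", "H100")      # Galileo vs. 5nm GPU
h100_vs_a100 = energy_advantage("H100", "A100")  # GPU gain across generations
g5_peak_fraction = G5_PEAK_FP16_TOPS / H100_PEAK_FP16_TOPS

print(f"G7 vs A100 energy/op advantage:  {g7_vs_a100:.2f}x")
print(f"G5 vs H100 energy/op advantage:  {g5_vs_h100:.2f}x")
print(f"H100 vs A100 energy/op gain:     {h100_vs_a100:.2f}x")
print(f"G5 peak FP16 as share of H100:   {g5_peak_fraction:.0%}")
```

On these table values, the H100 uses roughly two-thirds of the A100's energy per operation, while the reticle-limited G5 reaches only about 78% of the H100's FP16 peak. Both facts narrow the gap relative to the G7 vs. A100 comparison.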

Answer 2. Architecture, in the form of hardware specialization, can provide a 2x savings in bandwidth and a 1.7x savings in area, translating into tangible performance gains. Specifically, a 12nm Galileo implementation can meaningfully outperform a 7nm A100 and modestly outperform a 5nm H100. As transistors become more plentiful, raw compute becomes harder and harder to match with architectural specialization. Recall, however, that improvements in area and power scaling are themselves diminishing.

Related Work

Technology projection. Fuchs et al.9 present a limit study of chip specialization, separating out contributions from technology scaling and architectural gains,11 but the paper lacks a concrete treatment of deep learning. The Dark Silicon work from nearly a decade ago alluded to the limits of technology-scaling benefits, but it primarily considered the levels of parallelism in applications. Dally et al. discuss how domain-specific accelerators enable high performance by carefully considering memory movement and leveraging parallelism inherent in target algorithms.6 Khazraee et al.15 primarily focus on optimizing total cost of ownership (TCO) through specialization in older technology nodes (ranging from 250nm to 16nm) for workloads like Bitcoin mining, video transcoding, and deep learning. In contrast, we focus on the performance impact of specialization and technology for DL at nodes below 12nm.

Role of architecture. There have been recent works on SIMD, in particular, looking at AVX extensions. These include an analysis of convolution performance12 and an analysis of inference on CPUs.19 Reuther et al.30 present a survey of DL accelerators. These works do not look at the details of program behavior or the contribution of architecture or technology scaling. MAGNet37 is an RTL generator that optimizes a parameterized Simba-like architecture for a single application.

Conclusion

In light of the paradigm shifts confronting the semiconductor industry, our study revisits specialization within the context of advanced technology nodes becoming increasingly inaccessible due to economic considerations, sustainability concerns, and geopolitical strategies.

Our findings reveal that a 12nm specialized chip can match the performance of SOTA 5nm chips for deep-learning tasks, challenging the impact of technology blockades. The benefits of scaling to 3nm show diminishing returns: for LLMs, the maximum improvement is 2.9x when scaling from 12nm and 1.45x when scaling from 7nm; for MLPerf the gains are even lower, at 2.1x and 1.3x, respectively.
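One way to read these numbers is to ask how much of the 12nm-to-3nm gain is already captured by 7nm. The sketch below assumes that scaling gains compose multiplicatively across nodes; the article does not state this, and because the figures are maxima the split is only indicative. The function name `implied_12nm_to_7nm` is hypothetical.

```python
# Scaling factors quoted in the conclusion (the end node is 3nm in every case).
SCALING_TO_3NM = {
    "LLM": {"12nm": 2.9, "7nm": 1.45},
    "MLPerf": {"12nm": 2.1, "7nm": 1.3},
}


def implied_12nm_to_7nm(factors):
    """Gain attributable to 12nm -> 7nm, ASSUMING gains compose multiplicatively."""
    return factors["12nm"] / factors["7nm"]


implied = {name: implied_12nm_to_7nm(f) for name, f in SCALING_TO_3NM.items()}

for name, gain in implied.items():
    remaining = SCALING_TO_3NM[name]["7nm"]
    print(f"{name}: 12nm->7nm ~{gain:.2f}x, then 7nm->3nm {remaining:.2f}x")
```

Under that assumption, the step from 12nm to 7nm would account for roughly 2.0x (LLMs) and 1.6x (MLPerf), more than the subsequent scaling from 7nm to 3nm, which is the diminishing-returns pattern the text describes.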

Our study has limitations. Workloads could change substantially, which would affect both of our findings. Disruptive technology solutions such as in-memory and analog processing are outside our study’s scope. Sophisticated DVFS techniques could alter the energy/performance trade-offs. Challenges in software development represent a significant hurdle18,28 for new architectures to demonstrate efficacy or surpass GPUs, yet the economic dynamics may shift favorably in contexts where alternatives are scarce,13 suggesting a potential for investment and innovation in specialized architectures globally.

In conclusion, while we acknowledge the ongoing relevance of miniaturization, our analysis underscores the overlooked transformative potential of specialization. Its substantial contribution to the economics and efficiency of sustaining a Moore’s Law cadence of improvements highlights specialization as a critical factor in navigating the semiconductor industry’s future. This approach could redefine the economic landscape of semiconductor manufacturing, making specialization not just a complementary strategy but also a central pillar in achieving significant advances amid the diminishing returns from new technology nodes.
